Audrey E. Hendricks

Associate Professor of Statistics

University of Colorado Denver | Anschutz Medical Campus

Pathways in Genomic Data Science (PATH-GDS)

Pathways in Genomic Research Experiences for Undergraduates (PATH-GREU)

Dept. of Biomedical Informatics

Dept. of Mathematical and Statistical Sciences

Dept. of Biostatistics and Informatics

Colorado Center for Personalized Medicine

Human Medical Genetics and Genomics Program

Biography

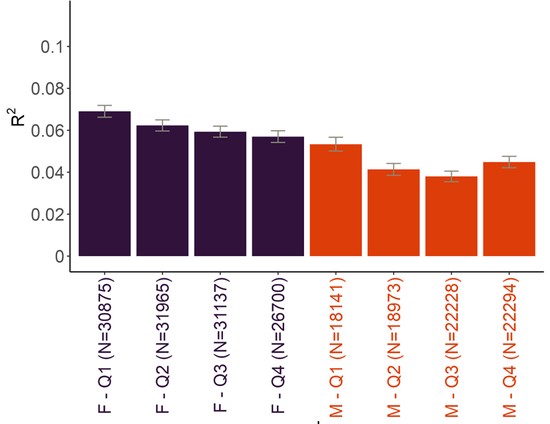

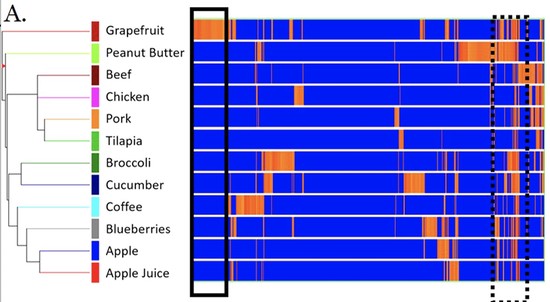

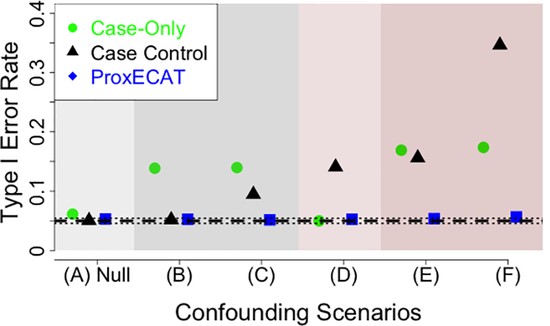

The Hendricks Team is committed to increasing opportunities for all people to learn about statistics, machine learning, and science. We are motivated to ask novel research questions and to ensure that the research is robust and accurate. The Team works at the intersection of biomedical research and statistical/machine learning method development. Current projects include developing methods to increase the utility of publicly available genetic resources, identifying the biological mechanisms of healthy diets, elucidating the genomic underpinnings of conditions and traits, and, most recently, understanding the molecular determinants that influence health and disease over the menopause transition. We follow best practices of reproducibility and robust science by creating open-source, well-documented software and releasing all data and code used for our studies. Our team is highly collaborative, working with people from a variety of backgrounds and education levels. We are always learning, improving, and pushing ourselves and others to be our best. In doing so, we produce first-class research for the broader community and train the next generation of biomedical scientists.

Interests

- Statistics

- Genetics & Genomics

- Data Science for All